
Introduction

With the rise of the Internet of Things and the shift from single products to decentralized systems, the functional working of artifacts will be largely defined in the digital layer. With the addition of Artificial Intelligence and Machine Learning capabilities, predictive relations are added to the mechanics of designing connected products, with implications for the agency users have in an algorithmic society.

The potential impact on the design space is explored through a design case of an intelligent object becoming a networked object with added predictive knowledge. This chapter introduces, on a conceptual level, how predictions will change the relation between users and contemporary things[1], and proposes an approach for translating this into new activities in designing networked objects.

Defining predictive relations

Predictive relations are the way in which a user builds a relation with the future and forms a mental model of the working of the system. Predictive knowledge seems to unlock a new type of interplay between humans and the world and between humans and non-humans: the functional working of an artifact is now shaped through that interplay rather than by its physical characteristics or the service it provides. Predictive relations are a changing digital condition for our relationship with contemporary things.

The influence of connectedness on the character of an object has been explored in different concepts of smart objects; blogjects, spimes, objects with intent and enchanted objects are some examples [1-4]. In these concepts the object remains a static entity, even though its behavior is defined by its networked capabilities. With the notion of contemporary things, objects are defined as constantly changing entities, or fluid assemblages [5]. In exploring predictive relations, the focus is on the relation between the human and the object. To understand this relation, the point of departure is the concept of co-performance combined with the notion of contemporary things as fluid assemblages. In the concept of “co-performance”, activities are delegated to a contemporary thing on the basis of the unique capabilities of human and artifact, or of human and expert system [6].

In decentralized systems, the consequence is that how a contemporary thing is experienced depends less on its physical characteristics or the service it provides than on the relation the user has with the contemporary thing. A smart object can be defined as a construction of time and space that can be understood through the perturbations it makes [7]. Its specific functioning depends on the interplay of the user and the contemporary thing: it is no longer a fixed state. The lens offered by the notion of fluid assemblages helps to look at artifacts more explicitly as agents within decentralized networks, beyond a narrow focus on matters of user-product experience. Here the assemblage combines material and immaterial resources, and it is conceptualized as fluid because it is assembled at runtime and changes continuously by performing both on the front of the stage and backstage [5]. This adds an extra dimension to the relation, as the decentralized network unlocks knowledge about possible futures in the relation with the contemporary thing. This knowledge influences the appropriateness of the delegation that takes place in the co-performance between the user and the contemporary thing, and the specific relations that are shaped in the process. In the future, things are expected to know more than the user, which might lead to an asymmetry in the relation [8].

Model of predictive relations – interplay-mental model-distributed network

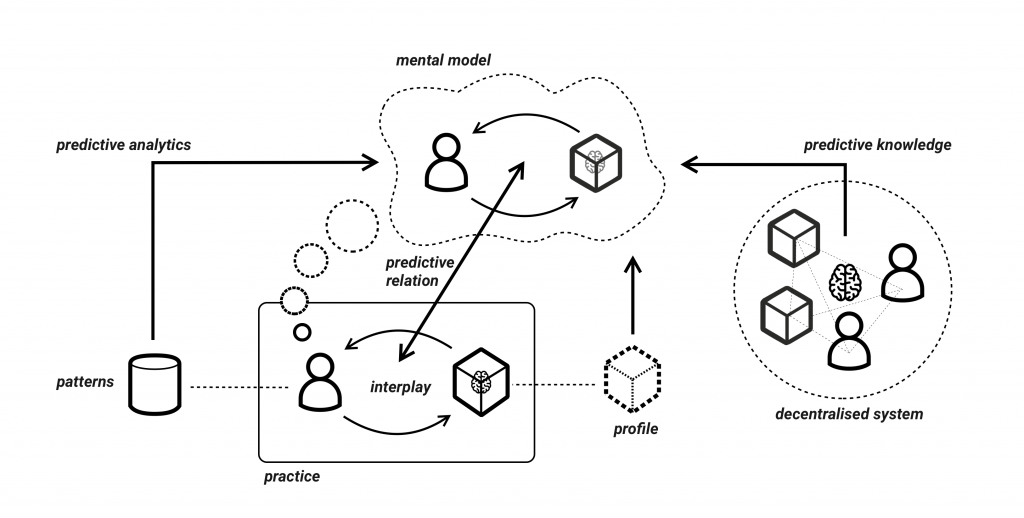

The notion of predictive relations influences the design space for connected products. The design space is shaped by the human's perceptions of the interplay, and the mental model is where the relations are shaped and where decisions to interact are made. The mental model is instrumental to using the artifact: it is a prescription the user forms before using it [9-11]. The mental model is also where designers can intervene to simplify complexity and help users understand the world; designers create metaphors to externalize mental models [12].

The mental model needs to have predictive power to allow the person to understand and to anticipate. It reflects the user's beliefs about the system and is not the same as the conceptual model shaped by the designer [13]. The gap Norman describes between the conceptual model and the mental model widens with contemporary things, especially as opaque data from the network influences the working of the contemporary thing. Predictive knowledge can contribute to the predictive power of the mental model to anticipate the behavior of the contemporary thing. This predictive knowledge is shaped by three types of predictions about the thing's participation in the interplay: (1) patterns from past activities (i.e., predictive analytics), (2) profiles built from stored rules and data that prescribe behavior, and (3) predictions built from similar situations with similar, networked users. The cues from predictions need to be understandable and relatable, and we need to trust the predictions in order to adapt our behavior to them [14]. Figure 1 visualizes the model of predictive relations.

Figure 1: visualization of the hypothesis of the working of predictive relations

The design space shapes the way predictive knowledge can be operationalized for the mental model. Hollan et al. already found that cognitive modeling is not limited to internal models of the external world, but that cognition is distributed between internal and external processes, coordinated on different timescales, between internal resources (memory, attention, executive function) and external resources (objects, artifacts, at-hand materials) [15]. The mental model can therefore rely more on outside connections and can be the place where the embodied relationship between action and meaning is made [16]. The mental model of behavior should be translated into the physical presence and behavior of the thing. Shaping the predictive relation requires a different design approach that combines adaptive and predictive processes.

A first approach for designing predictive relations

The design of contemporary things with predictive knowledge combines the modeling of intelligent and predictive behavior. One way to understand the impact of predictive knowledge is to iterate on a device that already behaves intelligently rather than starting from a so-called dumb device. This question was tested in a short exercise in which 30 design students were asked to take an existing intelligently behaving device and add predictive knowledge to it.[1] If a device could use insights from predictions to operate in the present, it would deliver a different kind of behavior than an adaptive system. The table compares the two.

| System | Basis for acting | Sources of knowledge | Results |
|---|---|---|---|
| Adaptive system | Profile for scripted behavior | Behavior of the user in the now; patterns of stored behavior; expert knowledge | Updated profile for scripted behavior (in the past) |
| Predictive system | Ruleset for prescriptive behavior | In addition to the sources of an adaptive system: data of forecasted phenomena (e.g., weather predictions); data of similar profiles that are in a different phase of the life cycle | Execution of the rules for present behavior, steering co-performance (in the future) |

In building predictive relations with the future, predictive knowledge is not understood as prediction in the sense of fortune telling. The key is that the data needed to operate a device is information that is already available in the network and has already been used in a use case similar to the current interplay of human and device. An example is the way the AlphaGo game engine was constructed: by adding a component of self-play, the AI was able to use knowledge from a self-generated decentralized network [17].

A design approach for predictive relations can be divided into three phases: (1) deconstructing the intelligence of a contemporary thing, (2) adding predictive knowledge to the behavior of the interplay, and (3) building this into an engaging relation. These phases are fleshed out below, using the Nest thermostat to understand the consequences for the design of intelligent devices when predictive knowledge is added.

(1) Understanding the intelligence: working of the intelligent artifact

The starting point is a smart object that performs based on input that is understandable for the human actor. The first step is to understand the working of the device, specifically the intelligence that is part of its functionality and in what sense it is part of the core service of the device. In addition to an analysis on the level of functionality and interaction, the designer should map the sources of data, the connections to clouds, and the way data flows and is stored in temporary memory or collected for profiling.

Typical techniques come from service design practice combined with system design: service blueprints, customer journeys, dataflow maps and system design.

Nest is a poster child for the smart home and for the design of intelligent devices. The device is a good illustration of different concepts of smart objects and the Internet of Things, and of how Machine Learning and Artificial Intelligence play a role in the working of contemporary things. The Nest device is ‘smart’ in that it can sense its context, has access to data and adapts to these data via rule-based operation [18]. The Nest device can also be described as an ‘intelligent’ device. Intelligence differs from smartness in that the device can learn or understand how to deal with new or trying situations. This intelligence is of a different sort: it emerges from the relation between the thing and the human [19].

In practice, the Nest's intelligence consists of the thermostat logging the moments at which the user adjusts the temperature and translating these timestamps and temperatures into a reference profile for setting the room temperature. Based on the actual presence of the user in the room, the rules are executed and the temperature is adjusted accordingly. The Nest also learns how many people are present in the house and, if possible, combines their profiles. Having different profiles based on the number of people present is relatively simple; it becomes more complex when the personal settings of each user are combined in the behavior, especially if these profiles have contradictory preferences, for instance when one person in the household prefers a temperature of 19 °C and another 21 °C.
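The adaptive behavior described above can be made concrete with a small sketch. It is not Nest's actual algorithm, which is not specified here: the class `AdaptiveThermostat`, the per-hour averaging of logged adjustments, the 18 °C fallback and the choice to resolve contradictory preferences by averaging are all hypothetical assumptions made for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from statistics import mean
from typing import Optional


@dataclass
class AdaptiveThermostat:
    """Hypothetical adaptive thermostat: learns a per-hour reference profile
    from logged manual adjustments. Illustrative only, not Nest's algorithm."""

    # user -> hour of day -> temperatures the user chose at that hour
    log: dict = field(default_factory=lambda: defaultdict(lambda: defaultdict(list)))
    default_setpoint: float = 18.0  # assumed fallback in °C

    def record_adjustment(self, user: str, hour: int, temperature: float) -> None:
        """Log the moment (hour) and temperature of a manual adjustment."""
        self.log[user][hour].append(temperature)

    def preferred(self, user: str, hour: int) -> Optional[float]:
        """Learned reference setpoint for this user and hour, if any."""
        temps = self.log[user].get(hour)  # .get does not create empty entries
        return mean(temps) if temps else None

    def setpoint(self, hour: int, present: list[str]) -> float:
        """Execute the learned rules for whoever is present right now.
        Contradictory preferences are averaged (a hypothetical policy)."""
        prefs = [p for u in present if (p := self.preferred(u, hour)) is not None]
        return mean(prefs) if prefs else self.default_setpoint


thermostat = AdaptiveThermostat()
thermostat.record_adjustment("anna", 7, 19.0)
thermostat.record_adjustment("ben", 7, 21.0)
print(thermostat.setpoint(7, ["anna", "ben"]))  # 20.0
```

With one occupant preferring 19 °C and another 21 °C at the same hour, the sketch settles on 20 °C, a temperature neither of them chose. Averaging is only one possible policy, but it shows why combining contradictory profiles is where a purely adaptive approach starts to strain: everything the device does can still be traced back to the users' own past adjustments.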

The described intelligence is based entirely on adapting to the current situation and on knowledge of the users in the household; in this sense it functions as a prediction machine [20]. Users can understand why the Nest behaves as it does, which sets this behavior apart from predictive relations based on predictive knowledge that goes beyond deductive reasoning. In the next step, the designer should explore what this type of knowledge can be.

(2) Envisioning predictive knowledge from the perspective of the artifact

This is a key step: diving deep into the context of the device and the user to envision what kind of knowledge could qualify as predictive and how it would influence the behavior of the device. As mentioned before, this knowledge is by definition hidden from the awareness of the human actor.

It is an explorative exercise that starts from the current intelligence and performs a deductive analysis of possible scenarios of ‘unknown known’ data[1].

The Nest already combines data from different devices with sensor data gathered directly from the physical context. The aim of the device is to adapt the temperature setting to the current situation, such as the number of people at home and the weather outside. The Nest continuously learns to perfect the HVAC (Heating, Ventilation, Air Conditioning) profile. A Nest with predictive knowledge could use data on the daily routine of its user, such as driving home from work, and adjust the profile to the predicted situation. The thermostat would actively combine data from other Nest users with similar behavior in the extended network and connect data sources about predicted future activities, such as friends-of-friends’ agendas, events or weather forecasts.
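How such predictive knowledge could steer behavior can be sketched in a few lines. Everything in this sketch is a hypothetical assumption rather than a real Nest feature: the `Forecast` record, the functions `predicted_arrival` and `heating_start`, the 0.7 weight on the user's own history, and the linear heating model. The idea is that the thermostat blends the user's own arrival pattern with that of similar networked users, then uses a weather forecast to decide when to start heating.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Forecast:
    """Hypothetical inputs a predictive thermostat might pull from the network."""
    own_arrivals: list[float]      # user's past arrival times, hours since midnight
    similar_arrivals: list[float]  # arrival times of similar users in the network
    outdoor_temp: float            # forecast outdoor temperature at arrival, °C


def predicted_arrival(f: Forecast, own_weight: float = 0.7) -> float:
    """Blend the user's own pattern with that of similar users.
    Falls back to whichever source has data (raises if both are empty)."""
    if not f.own_arrivals:
        return median(f.similar_arrivals)
    if not f.similar_arrivals:
        return median(f.own_arrivals)
    return (own_weight * median(f.own_arrivals)
            + (1 - own_weight) * median(f.similar_arrivals))


def heating_start(f: Forecast, indoor: float, target: float,
                  base_rate: float = 2.0, loss_per_degree: float = 0.05) -> float:
    """Hour at which to start heating so the room reaches `target` on arrival.
    Assumes a linear heating rate (°C/hour) that drops by `loss_per_degree`
    for every degree the target exceeds the forecast, floored at 0.1."""
    gap_outside = max(target - f.outdoor_temp, 0.0)
    rate = max(base_rate - loss_per_degree * gap_outside, 0.1)
    hours_needed = max(target - indoor, 0.0) / rate
    return predicted_arrival(f) - hours_needed


f = Forecast(own_arrivals=[18.0, 18.5, 18.0], similar_arrivals=[17.5, 18.0], outdoor_temp=5.0)
print(round(heating_start(f, indoor=16.0, target=20.0), 3))  # 14.725
```

A colder forecast lowers the assumed heating rate, so the sketch starts heating earlier. Unlike a profile learned only from the user's own adjustments, this decision rests on data the user never sees (other users' routines, the weather forecast), which is precisely the hidden predictive knowledge that the next phase has to turn into a relation.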

How this affects the relation between user and device is the next step in designing predictive relations. It is important to separate this from the functional working of the predictive device system and to focus on the relation that is shaped by the injection of predictive knowledge.

(3) Engaging a relation through the predictive behavior

What is the impact of this predictive knowledge on the interplay of user and device? This is an important step to go beyond the functional data and knowledge and to set out the future behavior of the device. What kind of relation will the human actor need with the contemporary thing to be able to deal with this hidden predictive knowledge? This step defines the predictive relation of human and device.

Thing-centered design methods such as interviews with things and object personas [21, 22] are particularly suited to exploring the engagement of user and device and to making the engagement of the artifact and its ecosystem with the user tangible.

Stepping away from smartness as a human-centered concept, and supposing that the artifact has some autonomy in its functioning, co-performance puts the interplay at the center. Human judgement nevertheless plays a defining role: without connecting to human emotions, artifacts will not have a role in social practice. With these complementary capabilities, artificial performers function as a category in their own right. Artifacts have their own kind of intelligence in social practice, and with predictive knowledge the role an artifact plays in the interplay seems to change. If the agency and intelligence of ‘smart’ artifacts always take place in the context of human perceptions, the addition of predictive knowledge influences these perceptions. Looking at the Nest example, Kuijer & Giaccardi [6] describe how the sleep setting of the Nest is limited to rules based on generic health recommendations. The role the Nest takes here, in the interplay between the heating system and the human who wants to go to sleep, is shaped by such external influences. In this specific situation the reason can be understood, but what if these rules were defined by predictive knowledge known only to the system at that moment? The interplay then becomes not only an act of social practice but also key to understanding the actual functioning of the product system.

Given the (in)ability to adjust the thermostat to real-life messiness, a new type of smartness for things appears to be needed. Not smart, not intelligent, not even adapting, but reasoning: drawing conclusions from patterns, combining changing situations and cues as to why a situation happens. Not by adjusting a profile through intelligent learning alone, but through empathic knowledge combined with specific characteristics. This reasoning “should show sensitivity to the power dynamics involved when different ideas of appropriate practice come together in situated performance” [6].

Building these predictive relations precedes the actual design of the interplay of the human and nonhuman. It results in a specified brief for designing the interactions of the contemporary thing with the user.

Future research agenda

Understanding the role of predictive relations in the design of connected artifacts is only the start of the impact predictive knowledge will have on our relation to the world through these contemporary things. Cars with autonomous driving functions, and Tesla's system in particular, are an example of current predictive systems. In 2016 a Tesla owner ‘driving’ his car on Autopilot filmed with his dashcam how the car started to slow down for no apparent reason. A few seconds later the reason became clear, as a serious accident happened just ahead. The Tesla responded in time to avoid a collision[2]. Here, Autopilot predicted the future: the driver had delegated the initiative from himself to the car. Such delegation can lead to what is referred to as ‘alienation’: we feel disconnected from ourselves as a result of our disconnection from the devices we use, because their working is defined more in the system than in the interaction. This can even lead to physical unease [23].

Figure 2: model of predictive relations and how the decentralized system informs the user in making decisions (a) or prescribes behavior (b)

The addition of predictive capabilities to the working of the system not only influences the way the device is operated but also seems to influence the perception of its working, with an impact on one’s sense of autonomy, trust in the device, and so on [24, 25]. The question then is: what does this mean for the design space of the future designer of connected products? And how do we design for things that predict and even prescribe? In a more-than-human world of design and designing, outcomes and experiences are the result of dynamic interplay between people and networked computational things, as well as between things and other things (cf. [26]). Adding predictive knowledge as a driver of the behavior of smart objects adds an extra layer of complexity that calls for further exploration of its effects on the way we design for intelligence. A first step could be to incorporate the three phases described above into the design process: understanding the intelligence, envisioning predictive knowledge, and engaging a relation through predictive behavior.

References

1. Marenko, B., Neo-animism and design: A new paradigm in object theory. Design and Culture, 2014. 6(2): p. 219-241.

2. Rose, D., Enchanted objects: Design, human desire, and the Internet of things. 2014: Simon and Schuster.

3. Rozendaal, M., Objects with Intent: A New Paradigm for Interaction Design. Interactions, 2016(May-June 2016): p. 62-65.

4. Sterling, B., Shaping things. 2005.

5. Redström, J. and H. Wiltse, Press Play: acts of defining (in) Fluid Assemblages. Nordes 2015; Design Ecologies, 2015. 6.

6. Kuijer, L. and E. Giaccardi, Co-performance: Conceptualizing the Role of Artificial Agency in the Design of Everyday Life, in CHI 2018. 2018, CHI: Montréal, Canada.

7. Ash, J., Phase Media: Space, Time and the Politics of Smart Objects. 2019: Bloomsbury.

8. Nourbakhsh, I.R., Robot Futures. 2013: MIT Press.

9. Greca, I.M. and M.A. Moreira, Mental models, conceptual models, and modelling. International Journal of Science Education, 2000. 22(1): p. 1-11.

10. Nielsen, J. Mental Models. 2010 [cited 10 July 2019]; Available from: https://www.nngroup.com/articles/mental-models/.

11. Streitz, N.A., Mental Models and Metaphors: Implications for the Design of Adaptive User-System Interfaces, in Learning Issues for Intelligent Tutoring Systems, H. Mandl and A. Lesgold, Editors. 1988, Springer: New York.

12. Ricketts, D. and D. Lockton, Mental landscapes: externalizing mental models through metaphors. Interactions, 2019. 26(2): p. 86-90.

13. Norman, D.A., Some observations on mental models, in Mental models. 2014, Psychology Press. p. 15-22.

14. Orrell, D., The Future of Everything, the science of prediction. 2007, New York: Basic Books.

15. Hollan, J., E. Hutchins, and D. Kirsh, Distributed cognition: toward a new foundation for human-computer interaction research. ACM Trans. Comput.-Hum. Interact., 2000. 7(2): p. 174-196.

16. Dourish, P., Where the action is: the foundations of embodied interaction. 2004: MIT press.

17. Silver, D., Hubert, T., Schrittwieser, J., Antonoglou, I., Lai, M., Guez, A., … & Lillicrap, T., A general reinforcement learning algorithm that masters chess, shogi, and Go through self-play. Science, 2018: p. 1140-1144.

18. Schreiber, D., et al., Introduction to the Special Issue on Interaction with Smart Objects. ACM Transactions on Interactive Intelligent Systems, 2013. 3(2).

19. Taylor, A.S., Machine Intelligence, in CHI 2009. 2009: Boston.

20. Agrawal, A., Gans, J., & Goldfarb, A., Prediction machines: the simple economics of artificial intelligence. 2018: Harvard Business Press.

21. Chang, W.-W., et al., “Interview with Things”: A First-thing Perspective to Understand the Scooter’s Everyday Socio-material Network in Taiwan, in DIS 2017. 2017: Edinburgh.

22. Cila, N., et al. Thing–Centered Narratives: A Study of Object Personas. in Proceedings of the 3rd Seminar International Research Network for Design Anthropology. 2015.

23. Bean, J., Nest Rage. Interactions, 2019. 26(May-June 2019): p. 1.

24. Wiegand, G., et al. I Drive-You Trust: Explaining Driving Behavior Of Autonomous Cars. in Extended Abstracts of the 2019 CHI Conference on Human Factors in Computing Systems. 2019. ACM.

25. Yin, M., J. Wortman Vaughan, and H. Wallach. Understanding the Effect of Accuracy on Trust in Machine Learning Models. in Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems. 2019. ACM.

26. Giaccardi, E. and J. Redström, Technology and More-Than-Human Design. Design Issues: history/theory/criticism, 2020.

[1] Referring here to an infamous statement by Donald Rumsfeld, US Secretary of Defense, at a 2002 news briefing; see https://en.wikipedia.org/wiki/There_are_known_knowns, last accessed 6 November 2019

[2] More on the specific event at https://www.engadget.com/2016/12/28/tesla-autopilot-predicts-crash (last accessed 30 July 2019)

[1] In a workshop organized by Iskander Smit at Master Research Day 2020, about 30 design students from the Faculty of Industrial Design Engineering at Delft University of Technology worked on this assignment in 6 teams. https://delftdesignlabs.org/news/workshop-master-research-day-2020/

[1] This term indicates a category of descriptions of artefacts that is rooted in the internet and the digital. Cf. Redström and Wiltse (2018).