This post is also sent as an update via the monthly newsletter. Around the end of each month, I share what I have learned about Cities of Things. More on the background of Cities of Things and the current research projects can be found on the website. In my personal weeknotes newsletter, Target_is_new, I keep track of the news of the week.

Reading back the weekly updates, I notice a steady stream of new robot-dog applications, mostly built on Spot from Boston Dynamics, ranging from policing to acting as a doctor. Last-mile delivery pods also seem to be adopted by new players every week, probably driven by the pandemic lockdowns. We could see both as the typical creatures inhabiting the cities of things, but it is interesting to reflect on some differences.

From the outside, they differ considerably in how they resemble human characteristics. The last-mile vehicles resemble other vehicles we use for transport, while the robot dogs are a new type of living creature and more often touch upon human-animal interaction. The robot dogs are also a kind of democratization of industrial robots, bringing them into a smaller form factor that is easier to adopt. The new Stretch robot from Boston Dynamics, introduced last month, is another interesting example. It is positioned as a mobile logistics robot, moving goods from a truck into the warehouse and vice versa; it is like a human worker combined with a tool such as a forklift. That makes these robots three types of support, each connected to human capacities: a delivery person replaced by an autonomous moving bag, a warehouse worker replaced by autonomous lifting gear, and a human in a guarding or communicating role replaced by an autonomous living object.

All of these stay close to the objects or tools they replace. That is needed for acceptance and for transparency about what they do. Here we touch on an important aspect: for acceptance in our everyday lives, autonomously operating devices need to be readable archetypes. For now.

Will this change? Will we give autonomously operating citythings more credit for their own character, for the authentic choices they might start making? Think of the painting robots that create art, which is even sold as NFTs. If the autonomous thing is just a predictable extension of human-operated things, there is no character to recognise, and it is also unlikely to produce interesting art. So might it be a virtue to have non-explainable AI driving these characterful citythings, creating a kind of non-transparent way of working? In that situation, our relations are based not on our human representations in the autonomous things but on the embodiment of the decisions the robotic things make…

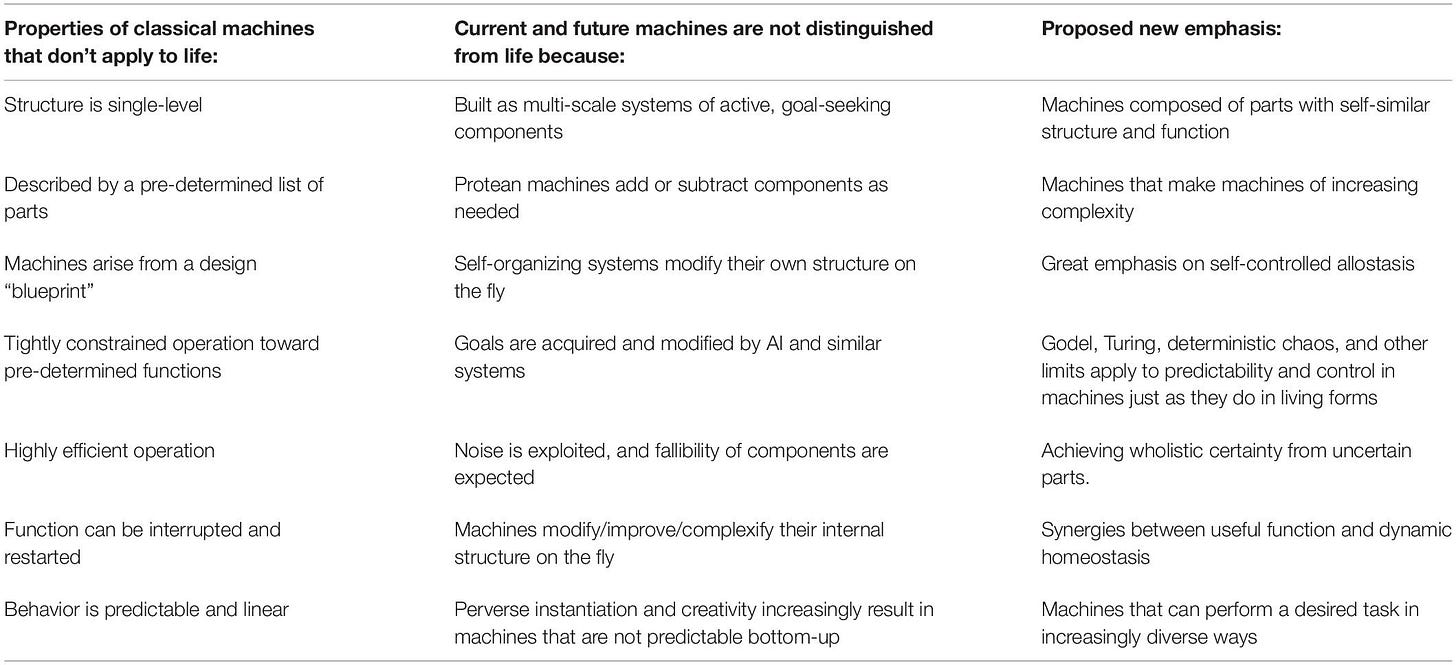

This notion opens up many new questions, of course. Two interesting reads help to dive into it further. First, in her trend presentation at SXSW, Amy Webb introduced the You of Things, in which the personal connector plays an important role in the context of networked objects. It is a nice capture of weak signals but deserves more thinking; the translation into examples is still quite basic. Second, a fundamental exploration of how our notion of what living things are is changing can be found in this extensive article: “Living Things Are Not (20th Century) Machines: Updating Mechanism Metaphors in Light of the Modern Science of Machine Behavior”. It proposes new definitions of machines, robots, programs, and software/hardware.

The science of behavior, applied to embodied computation in physical media that can be evolved or designed or both, is a new emerging field that will help us map and explore the enormous and fascinating space of possible machines across many scales of autonomy and composition.

It will be interesting to see how this plays out, also in relation to more hybrid systems, especially in a city context. That is something for another edition, though.